Reason and Feeling in the Anthropocene: why we need to integrate art and science for a sustainable future

Giulia Isetti


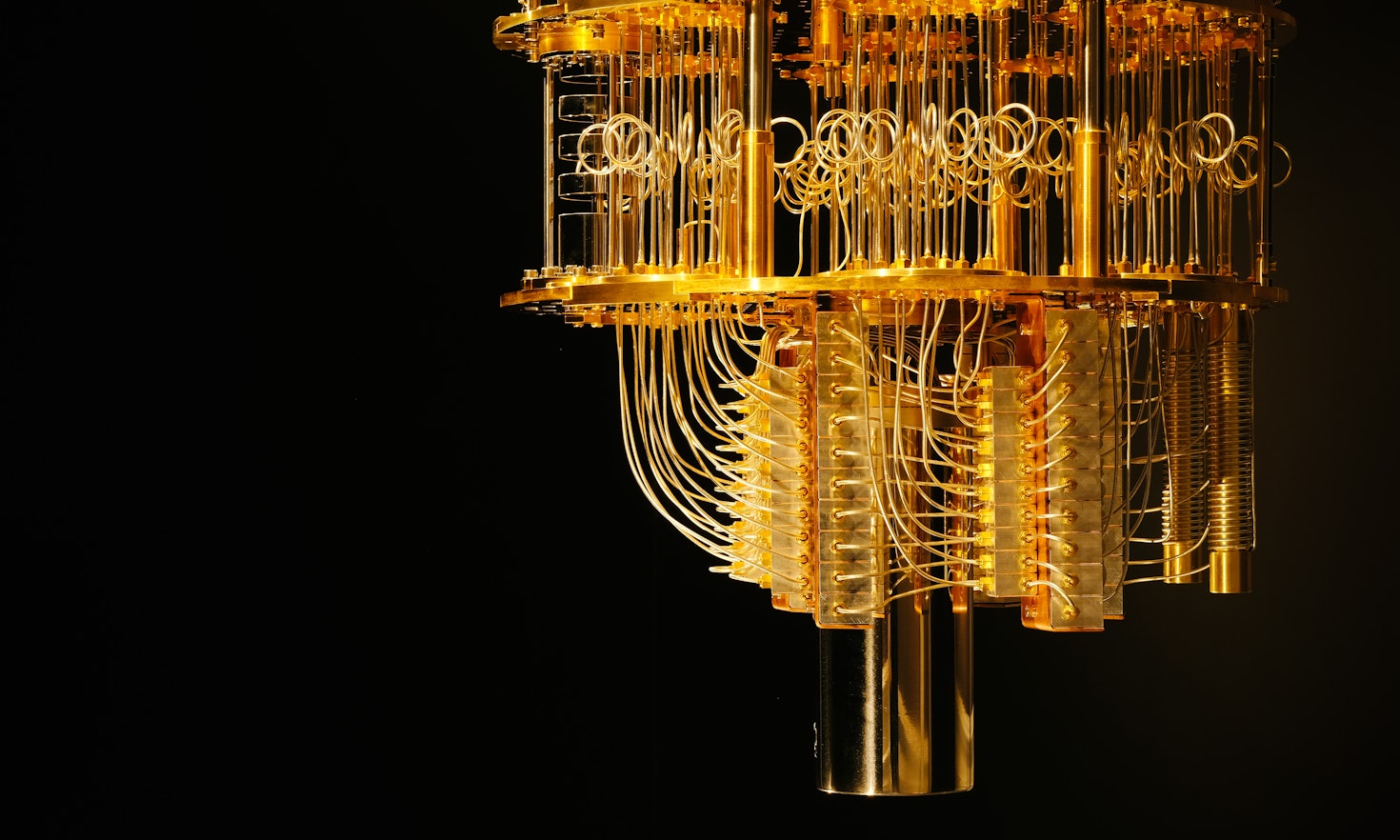

Current AI is far from perfect, and politics has not fully addressed what happens when it goes wrong. Quantum computing could revolutionise not only AI's speed and accuracy; while it is still in its infancy, it also gives us a chance to weave trust into it.

Artificial Intelligence (AI) is unquestionably affecting most people's lives today. When people think of AI, they often picture the form we see in films like The Terminator (1984), 2001: A Space Odyssey (1968), and Ex Machina (2014). It is unsurprising, then, that AI is not only seen as a threat but also personified, frequently in anthropomorphic form. However, despite growing concerns about AI in the form of killer robots, most of the AI we encounter in our day-to-day lives takes a less ominous form.

In medicine alone, we can find a host of AI applications, ranging from diagnostic systems that help doctors make diagnoses and recommend treatment options to monitoring systems that help doctors guide patients towards better health outcomes. Wall Street is also no stranger to AI systems. Gone are the days when most trades, and the best ones, were made by brokers shouting on the floor of the New York Stock Exchange. Nowadays, large firms that can afford fast algorithmic trading systems can make a vast number of trades, far outstripping what any human could feasibly manage. However, as in most cases, such systems are prohibitively expensive and accessible only to those with the economic means to purchase them, which gives those firms an even more significant asymmetric advantage; to those who have everything, everything will be given!

AI, then, can bring incredible benefits, but it can also cause a host of harms to society. We need look no further than science fiction to stretch our imaginations towards what AI might do in the far future. However, we are already witnessing some of the poor outcomes of putting AI into the world. Google had to apologize for the results of its Cloud Vision service, a computer vision tool, which categorized images of dark-skinned people differently from those of their light-skinned compatriots. For example, an image of a dark-skinned person holding a thermometer gun was categorized as a 'gun', whereas in the same image with a light-skinned person, the thermometer was categorized as an 'electronic device'. This is one of several examples of AI going wrong.

But we do not need to go far afield into specific domains to find AI systems at work. Most people reading this article (unless it was archaically printed out and handed to them) are doing so on a computer or, more probably, on a smartphone. But what makes their phones smart? AI systems, mainly in the form of machine learning algorithms that learn from their inputs and from feedback from their environment in order to make better decisions. Fundamentally, though, these systems run on computers. This is so obvious that it is usually taken for granted, and it is easily overlooked amid the paradigm shift towards quantum computing now under way. What happens to computing more generally will undoubtedly affect the field of AI and, in turn, the impact those AI systems have on society at large.
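What "learning from feedback" means can be sketched with one of the oldest learning rules, the perceptron. This is a hypothetical toy, not how any particular smartphone feature works. The model makes a guess and compares it with the correct label, and it adjusts its weights only when it was wrong. The names `predict` and `train_perceptron` and the AND dataset are illustrative assumptions.

```python
# Hypothetical toy: a perceptron that learns only from its mistakes.

def predict(weights, bias, x):
    """Weighted sum of inputs plus bias, thresholded to a 0/1 decision."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=10, lr=1.0):
    """Classic perceptron rule: nudge the weights towards the correct answer."""
    n_features = len(samples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for x, label in samples:
            error = label - predict(weights, bias, x)  # feedback: -1, 0 or +1
            if error != 0:
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
    return weights, bias


# Labelled examples of logical AND: the "environment" supplies the right answer.
data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(data)
print("learned weights:", w, "bias:", b)
print("predictions:", [predict(w, b, x) for x, _ in data])
```

Modern machine learning uses far larger models and gradient-based training. The principle of adjusting internal parameters in response to feedback is the same.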

To a large degree, regional institutions like the European Union have taken AI seriously, acknowledging the tremendous benefits that AI systems could bring to individuals, to society, and even to the environment at large. However, the ability of these systems to make autonomous decisions raises a host of ethical issues, such as who is responsible if a system makes a catastrophic decision, as in debates on whether autonomous weapons should be able to make life-and-death decisions. The European Union's Ethics Guidelines for Trustworthy AI set out seven key requirements for achieving such trustworthy AI. Although the guidelines are not without their critics, they touch on many of the important values that have repeatedly emerged in discussions of how we can build AI systems that we can trust to make decisions aligned with our moral values. The EU has gone further still, proposing legislation to govern the design and use of AI systems across member states and acknowledging that different types of AI systems pose different levels of risk.

Both approaches are necessary but not sufficient conditions for long-term trustworthy AI. Why? They touch on important and potentially effective ways to ensure that we build AI that aligns with our values, but they assume that the underlying architecture of these systems stays the same. What I mean is that such AI systems are built on classical computers, the kind we are all familiar with, which essentially operate on binary 1s and 0s. This is unsurprising given that, until very recently at least, these were the only types of computers at our disposal and the only ones for which AI has been designed and engineered.

So what is changing in computing, and what does it mean for the future of AI? A new type of computing has recently emerged: quantum computing. By exploiting the rules of physics, primarily quantum mechanics, quantum computing can tackle vast amounts of data and, for certain kinds of problems, make calculations that classical computers simply cannot handle. In essence, a quantum bit (qubit) is not restricted to being either 1 or 0 as a mutually exclusive binary. This seems unintuitive, and it is. At the level of quantum mechanics, physics allows a phenomenon called superposition: a qubit can hold a combination of 0 and 1 at the same time, and it only collapses into a definite 0 or 1 when it is measured.
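Superposition can be made a little more concrete with a simulation. In standard notation, a qubit's state is written |ψ⟩ = α|0⟩ + β|1⟩, where the complex amplitudes satisfy |α|² + |β|² = 1. Measuring it yields 0 with probability |α|² and 1 with probability |β|². The sketch below simulates this on a classical computer, assuming an equal superposition. It only mimics the statistics and is not real quantum hardware.

```python
import math
import random

# One qubit |psi> = alpha|0> + beta|1>, stored as two complex amplitudes.
# Assumed example state: the equal superposition a Hadamard gate makes from |0>.
alpha = complex(1 / math.sqrt(2), 0)
beta = complex(1 / math.sqrt(2), 0)

# Born rule: measurement probabilities are the squared magnitudes of the amplitudes.
p0 = abs(alpha) ** 2
p1 = abs(beta) ** 2
assert math.isclose(p0 + p1, 1.0)  # a valid state must be normalised


def measure(prob_zero, rng):
    """Collapse the superposition into a single classical bit."""
    return 0 if rng.random() < prob_zero else 1


rng = random.Random(42)  # fixed seed so the run is repeatable
shots = [measure(p0, rng) for _ in range(10_000)]
print(f"P(0) = {p0:.3f}, P(1) = {p1:.3f}")
print(f"fraction of 1s over {len(shots)} measurements: {sum(shots) / len(shots):.3f}")
```

Each individual measurement gives a plain 0 or 1. The superposition shows up only in the statistics over many repetitions.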

Traditionally, scientists who need to crunch huge data sets have turned, and still turn, to supercomputers to solve their mathematical quandaries. However, this does not mean that supercomputers can solve every problem, or at least not easily. When a supercomputer struggles, it is usually because the problem is not merely large but complex, and complexity is the most common reason traditional computers fail. Problems are considered complex when they involve many variables that interact in intricate ways. For example, something seemingly simple, like modelling an atom within a molecular chain, is actually incredibly complex: existing supercomputers struggle to model how the individual electrons of any given atom behave and interact. Keeping track of all the possible configurations requires a gargantuan working memory that even supercomputers do not possess.
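A back-of-the-envelope calculation shows why memory runs out. In a deliberately simplified model, each electron is treated as a two-level system, like a qubit. A full description of n such systems then needs 2ⁿ complex amplitudes. The sketch below assumes 16 bytes per amplitude (two 64-bit floats). Real electronic-structure codes use more elaborate representations and approximations, so this only shows the exponential trend.

```python
# Rough memory cost of storing the full quantum state of n two-level systems
# (n qubits, or n electron spins in a deliberately simplified model).
# Assumption: one complex number per basis state, at 16 bytes each
# (two 64-bit floats, as in NumPy's complex128).

BYTES_PER_AMPLITUDE = 16


def state_vector_bytes(n):
    """A state of n two-level systems needs 2**n amplitudes."""
    return (2 ** n) * BYTES_PER_AMPLITUDE


for n in (10, 30, 50):
    gib = state_vector_bytes(n) / 2 ** 30
    print(f"{n:>2} systems: {state_vector_bytes(n):,} bytes (~{gib:,.1f} GiB)")
```

Thirty systems already need 16 GiB. Fifty need 16 PiB (about 16.8 million GiB). Every additional system doubles the requirement, which is why brute-force classical simulation breaks down so quickly.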

Quantum computers change this by approaching complexity through finding patterns rather than through brute-force computation. The science behind how these still rudimentary quantum computers work is complex and, in many cases, unintuitive when compared with classical computing. Still, harnessing the laws of quantum mechanics in computing has already proven to be an effective way of handling complexity.
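"Finding patterns rather than computing by brute force" is a loose description. A standard textbook illustration, chosen here as an example and not taken from the author, is Deutsch's algorithm. Given a one-bit function f, it decides whether f is constant or balanced with a single query to f. A classical computer needs two queries. It works by interference: the amplitudes cancel or reinforce according to a global property of f. The sketch below simulates the two-qubit state vector classically. The helper names are hypothetical, and the simulation itself evaluates f for every basis state, which is precisely the cost a real quantum device avoids.

```python
import math

H = 1 / math.sqrt(2)  # Hadamard gate coefficient


def hadamard(state, qubit):
    """Apply a Hadamard gate to `qubit` (0 = query qubit x, 1 = ancilla y)
    of a 2-qubit state vector indexed as 2*x + y."""
    new = [0.0] * 4
    for idx, amp in enumerate(state):
        bits = [(idx >> 1) & 1, idx & 1]
        b = bits[qubit]
        for out in (0, 1):
            sign = -1 if (b == 1 and out == 1) else 1  # H|1> = (|0> - |1>)/sqrt(2)
            new_bits = bits.copy()
            new_bits[qubit] = out
            new[2 * new_bits[0] + new_bits[1]] += sign * H * amp
    return new


def oracle(state, f):
    """U_f |x, y> = |x, y XOR f(x)>: the single 'query' to f."""
    new = [0.0] * 4
    for idx, amp in enumerate(state):
        x, y = (idx >> 1) & 1, idx & 1
        new[2 * x + (y ^ f(x))] += amp
    return new


def deutsch(f):
    state = [0.0, 1.0, 0.0, 0.0]  # start in |x=0, y=1>
    state = hadamard(state, 0)
    state = hadamard(state, 1)
    state = oracle(state, f)
    state = hadamard(state, 0)
    # Probability of measuring the query qubit as 1 (basis states |1,0> and |1,1>)
    p_query_is_1 = state[2] ** 2 + state[3] ** 2
    return "balanced" if p_query_is_1 > 0.5 else "constant"


for name, f in [("f(x) = 0", lambda x: 0), ("f(x) = 1", lambda x: 1),
                ("f(x) = x", lambda x: x), ("f(x) = NOT x", lambda x: 1 - x)]:
    print(name, "->", deutsch(f))
```

The answer depends on a pattern across all of f's inputs, not on any single output. That is a small-scale version of the intuition in the text.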

Quantum computing promises to usher in an era of unparalleled speed and computing power. Practitioners have already theorised that quantum computing could augment how AI systems function, and IBM and Google continue to promote the narrative of quantum computing's significant impact on AI. However, given the plethora of ethical, social, legal, and governance issues, among others, that continue to be discussed and debated concerning AI, theorists argue that such issues will be exacerbated by the introduction of quantum computing to the field, since the physics underlying quantum computing adds a further layer of incomprehensibility and power. Perhaps, though, we can have trustworthy AI precisely because of quantum computing.

The ability for the system to be explicable does not necessarily mean that it has to be transparent, something that is often conflated with explicability.

Steven Umbrello

There is considerable uncertainty as to whether the mathematical-statistical models applied in AI can work with those of quantum computing, which use complex numbers and are bound by the rules of quantum physics. Some have argued that the inherent complexity and incomprehensibility of the decision-making pathways behind the behaviour of quantum AI systems automatically rule out explicability and, therefore, trust. But maybe this is not actually the case. The ability for the system to be explicable does not necessarily mean that it has to be transparent, something that is often conflated with explicability. Imagine that we want to know why a classically built AI decided to trade on company A rather than company B. We could inspect the system and try to trace the decision-making pathway it took. But the amount of data would be so gargantuan that, even with all of the information and every line of code, it would be nigh impossible to decipher the actual why. The system here can be fully transparent, yet functionally meaningless for what we want.
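One way to see that explicability need not depend on transparency is a contrastive, model-agnostic explanation. It answers "why A rather than B?" only by querying the system's inputs and outputs, without reading its internals. The sketch below is a hypothetical illustration. The "opaque" scoring model, its features, and all numbers are invented. The attribution swaps one feature at a time from B into A and measures how much A's score drops.

```python
# Hypothetical example: explain "why A rather than B?" by querying a model
# as a black box, never inspecting its internals. The model, features and
# numbers below are invented purely for illustration.

def opaque_model(features):
    # Stand-in for a system we cannot (or need not) read line by line.
    m = features["momentum"]
    v = features["volatility"]
    e = features["earnings_surprise"]
    return 0.6 * m - 0.8 * v ** 2 + 1.2 * e * (1 - v)


def contrastive_attribution(model, a, b):
    """For each feature, swap B's value into A and record how much A's score drops."""
    base = model(a)
    effects = {}
    for name in a:
        swapped = dict(a)
        swapped[name] = b[name]
        effects[name] = base - model(swapped)
    # Largest effect first: the features that most explain the preference for A.
    return sorted(effects.items(), key=lambda kv: abs(kv[1]), reverse=True)


company_a = {"momentum": 0.5, "volatility": 0.2, "earnings_surprise": 0.9}
company_b = {"momentum": 0.7, "volatility": 0.6, "earnings_surprise": 0.1}

print("trade on A" if opaque_model(company_a) > opaque_model(company_b) else "trade on B")
for name, effect in contrastive_attribution(opaque_model, company_a, company_b):
    print(f"{name}: {effect:+.3f}")
```

The explanation is short and actionable even though it says nothing about the model's internal structure. Because the features interact, the one-at-a-time effects need not add up to the full score gap. More principled attribution methods, such as Shapley values, address this.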

The same reasoning applies to quantum computing, which adds remarkable speed and computing power. How? The physical properties underlying quantum computing may remain mysterious to us, but phenomena such as quantum entanglement, which Einstein called "spooky action at a distance", need not be construed as a barrier to building trustworthy systems on quantum computers. Despite still being in their infancy and not widely adopted, quantum computers can produce highly reliable statistical outputs. That in itself provides a strong basis for deciding how much decision-making we delegate to any given quantum AI system. Moreover, rather than waiting for more problems to emerge, at which point it will be too late to make any real change, quantum computing's infancy gives us the perfect opportunity to explore, safely and within controlled contexts, different ways of building trustworthy AI on quantum computers.
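Quantum outputs are probabilistic, so their reliability comes from repetition: a computation is run many times ("shots") and the results are aggregated. A standard way to quantify this, offered here as an illustration and not as the author's method, is Hoeffding's inequality. Estimating an outcome probability to within ε with confidence at least 1 − δ needs n ≥ ln(2/δ) / (2ε²) independent shots. The sketch below assumes a hypothetical device whose true probability of reading "1" is 0.3, simulated with a seeded random generator.

```python
import math
import random


def shots_needed(epsilon, delta):
    """Hoeffding bound: shots so the estimated probability is within epsilon
    of the true value with probability at least 1 - delta."""
    return math.ceil(math.log(2 / delta) / (2 * epsilon ** 2))


def estimate_probability(sample_once, shots):
    """Average of repeated 0/1 measurement outcomes."""
    return sum(sample_once() for _ in range(shots)) / shots


rng = random.Random(7)  # fixed seed for repeatability
true_p = 0.3            # hypothetical probability of measuring "1"
n = shots_needed(epsilon=0.01, delta=0.05)
estimate = estimate_probability(lambda: 1 if rng.random() < true_p else 0, n)
print(f"{n} shots -> estimated P(1) = {estimate:.4f}")
```

Tightening the tolerance ε tenfold costs a hundredfold more shots, while demanding more confidence (smaller δ) costs only logarithmically more. This is one concrete way to calibrate how much trust a statistical output deserves.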

Quantum computing is novel, and it promises a host of benefits that are simply impossible to replicate on classical computers. It is not hard to imagine the AI systems that could be built on it, or to wonder whether the ethical, social, economic, and environmental issues those systems raise will be exacerbated. It makes sense to tackle these issues now, before they become intractable. Quantum AI can, by its very nature, be trustworthy, but it has to be designed explicitly for trust for that trust to be meaningful in any real sense, and for us humans to be responsible for the decision-making power we delegate and the data we feed into those systems.

Each year, two Stiftung Südtiroler Sparkasse Global Fellowships are awarded. The Fellows are offered the opportunity to work closely with the interdisciplinary team at the Center for Advanced Studies on topics of both global and glocal relevance, linking their personal experience, their research, and their chosen geopolitical areas with the Center's expertise.

The Global Fellowships are funded by the Stiftung Südtiroler Sparkasse / Fondazione Cassa di Risparmio di Bolzano.

This content is licensed under a Creative Commons Attribution 4.0 International license.
